Introduction to CNN Models - LeNet-5, AlexNet, VGG-16, Residual Networks

Definition

Convolutional Neural Networks (CNNs) are a class of deep neural networks designed specifically for processing structured grid data such as images. Key models in the evolution of CNNs include LeNet-5, AlexNet, VGG-16, and Residual Networks (ResNets), each contributing significant advancements in architecture, performance, and practical applications.

Key Concepts

- LeNet-5

- AlexNet

- VGG-16

- Residual Networks (ResNets)

- Convolutional Layers

- Pooling Layers

- Activation Functions

- Model Depth and Complexity
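The three building blocks listed above (convolution, pooling, activation) can be sketched in plain Python on a single-channel 4x4 input. This is a minimal illustration, not how any framework implements them. As in most deep learning libraries, the "convolution" is computed as a cross-correlation, meaning the kernel is not flipped. The function names `conv2d`, `max_pool2d`, `relu` and `tanh` and the example image and kernel are hypothetical.

```python
import math

def conv2d(image, kernel, stride=1):
    """Valid (no padding) 2D cross-correlation of one channel with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the window under it
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i * stride + di][j * stride + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out

def max_pool2d(fmap, size=2, stride=2):
    """Keep the largest value in each size x size window (downsampling)."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [max(fmap[i * stride + di][j * stride + dj]
             for di in range(size) for dj in range(size))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def relu(fmap):
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in fmap]

def tanh(fmap):
    """Element-wise hyperbolic tangent, squashing values into (-1, 1)."""
    return [[math.tanh(v) for v in row] for row in fmap]

image = [
    [1, 2, 0, 1],
    [3, 0, 2, 4],
    [0, 5, 1, 3],
    [2, 1, 6, 0],
]
kernel = [[1, 0], [0, -1]]  # responds to differences along the main diagonal

features = conv2d(image, kernel)
print(features)          # [[1.0, 0.0, -4.0], [-2.0, -1.0, -1.0], [-1.0, -1.0, 1.0]]
print(relu(features))    # [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
print(max_pool2d(image)) # [[3, 4], [5, 6]]
```

Stacking these three operations (convolution, then a non-linearity, then pooling) is the basic pattern shared by every model described below. The models differ mainly in depth, filter sizes, and the activation used.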

Detailed Explanation

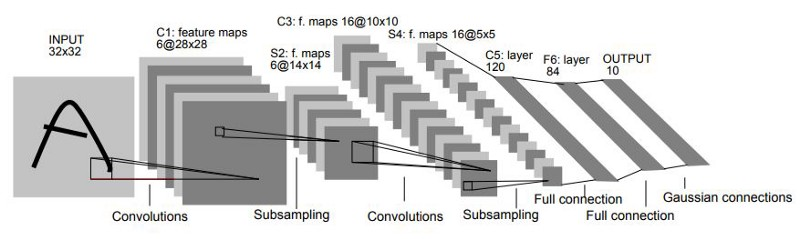

LeNet-5

- Developed By: Yann LeCun et al., 1998.

- Key Features:

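- Architecture: Consists of 7 layers, not counting the input: 2 convolutional layers (C1, C3), 2 subsampling (average-pooling) layers (S2, S4), and 3 fully connected layers (C5, F6, and the output layer). C5 is formally a convolutional layer. However, its 5x5 kernels cover the entire 5x5 input maps, so it behaves as a fully connected layer.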

- Activation Function: Uses tanh activation function.

- Application: Originally designed for handwritten digit recognition (MNIST dataset).

- Significance: One of the earliest successful applications of CNNs, demonstrating the feasibility of deep learning for image processing tasks.
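The layer sizes of LeNet-5 follow from the standard output-size formula for a convolution or pooling window, floor((n + 2p - k) / s) + 1. The sketch below traces feature-map shapes through the network. It assumes the 32x32 input plane used in the original paper and the usual layer widths (6, 16, 120, 84, 10). The original C3 layer connects only to a subset of S2's maps, which changes its parameter count but not its output shape. `conv_output_size` and `lenet5_shapes` are hypothetical helper names.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial size after a conv/pooling window: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

def lenet5_shapes():
    """(layer, channels, height, width) for LeNet-5 on a 32x32 grayscale input."""
    shapes = [("input", 1, 32, 32)]
    n = 32
    n = conv_output_size(n, 5)            # C1: six 5x5 kernels -> 28x28
    shapes.append(("C1 conv", 6, n, n))
    n = conv_output_size(n, 2, stride=2)  # S2: 2x2 subsampling -> 14x14
    shapes.append(("S2 subsample", 6, n, n))
    n = conv_output_size(n, 5)            # C3: sixteen 5x5 kernels -> 10x10
    shapes.append(("C3 conv", 16, n, n))
    n = conv_output_size(n, 2, stride=2)  # S4: 2x2 subsampling -> 5x5
    shapes.append(("S4 subsample", 16, n, n))
    n = conv_output_size(n, 5)            # C5: 5x5 kernels on a 5x5 map -> 1x1
    shapes.append(("C5 conv (acts as fully connected)", 120, n, n))
    shapes.append(("F6 fully connected", 84, 1, 1))
    shapes.append(("output", 10, 1, 1))
    return shapes

for name, c, h, w in lenet5_shapes():
    print(f"{name:<36} {c:>3} x {h:>2} x {w:>2}")
```

The C5 row shows why C5 is often counted as a fully connected layer. A 5x5 kernel applied to a 5x5 map yields a single 1x1 output per channel.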

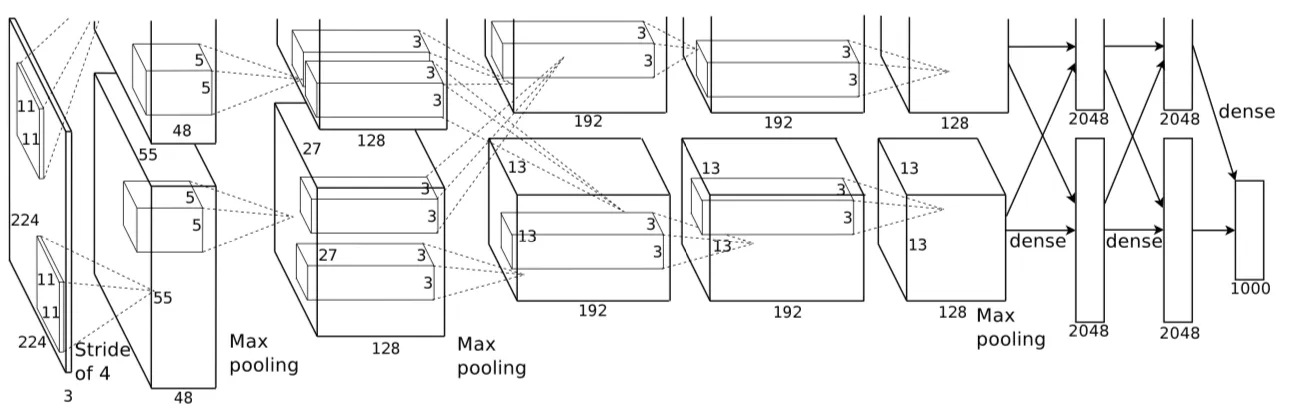

AlexNet

- Developed By: Alex Krizhevsky, Ilya Sutskever, Geoffrey Hinton, 2012.

- Key Features:

- Architecture: 8 layers including 5 convolutional layers followed by 3 fully connected layers.

- Activation Function: Uses ReLU (Rectified Linear Unit) activation function.

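- Innovations: Applied dropout to the first two fully connected layers to reduce overfitting. The network was split across two GPUs so that training on the roughly 1.2 million ImageNet training images was feasible. It also used overlapping max pooling, local response normalization, and data augmentation.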

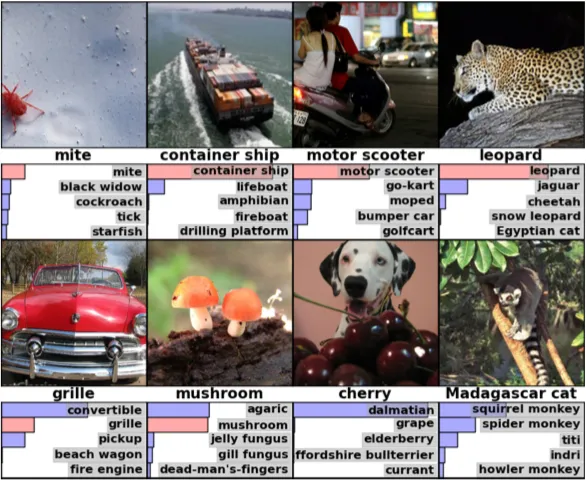

- Application: Won ILSVRC 2012 with a top-5 test error of 15.3%, compared with 26.2% for the second-best entry.

- Significance: Marked the resurgence of interest in deep learning, showcasing the potential of CNNs in large-scale image classification tasks.
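AlexNet's two training innovations can be illustrated at the level of single values. ReLU's gradient stays at 1 for any positive input, whereas tanh saturates and its gradient shrinks toward 0 for large inputs. Dropout randomly zeroes units during training. The sketch uses the common "inverted dropout" variant, which scales surviving units by 1 / (1 - p) during training. The AlexNet paper instead multiplied outputs by 0.5 at test time; the two are equivalent in expectation. All function names here are hypothetical.

```python
import math
import random

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # Gradient is 1 for any positive input, so large activations do not shrink it
    return 1.0 if x > 0 else 0.0

def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2, which approaches 0 as |x| grows (saturation)
    return 1.0 - math.tanh(x) ** 2

def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: during training, zero each unit with probability p and
    scale survivors by 1 / (1 - p); at test time, return activations unchanged."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

for x in (0.5, 2.0, 5.0):
    print(f"x={x}: relu'={relu_grad(x)}, tanh'={tanh_grad(x):.5f}")

rng = random.Random(0)
print(dropout([1.0] * 8, p=0.5, rng=rng))  # each entry is 0.0 or 2.0
```

Because survivors are rescaled during training, the expected activation is unchanged. This is why no correction is needed when `training=False`.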

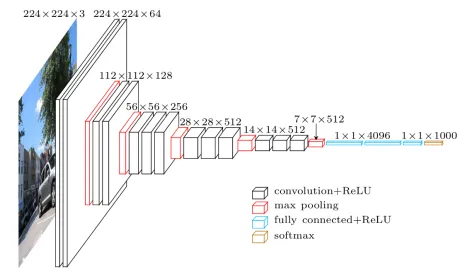

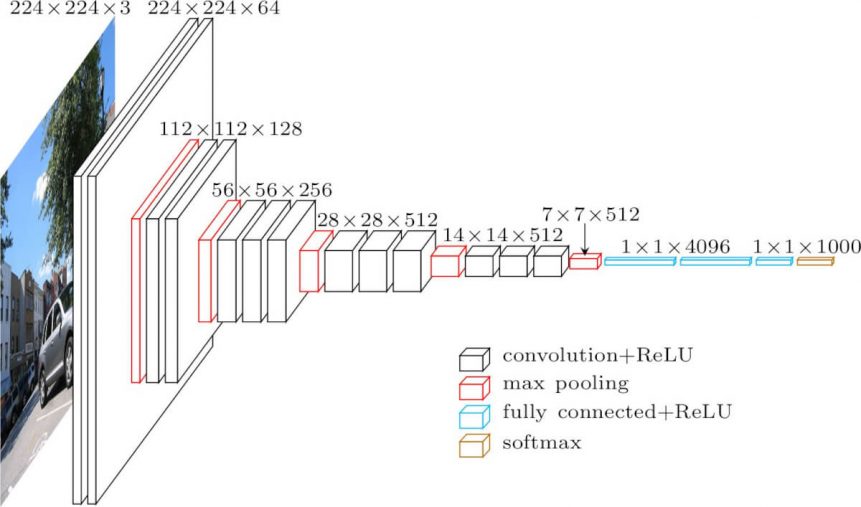

VGG-16

- Developed By: Karen Simonyan and Andrew Zisserman, Visual Geometry Group (VGG), University of Oxford, 2014.

- Key Features:

- Architecture: 16 layers deep with 13 convolutional layers and 3 fully connected layers.

- Filters: Uses small 3x3 convolution filters (stride 1, padding 1) throughout the network, with 2x2 max pooling (stride 2) between blocks.

- Activation Function: Uses ReLU activation function.

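- Application: Placed second in the ILSVRC 2014 classification task and first in localization. Its pretrained weights are widely reused for transfer learning and feature extraction.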

- Significance: Demonstrated that deep networks with a uniform architecture (repeated use of small filters) could achieve state-of-the-art performance.
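The uniform VGG-16 design makes its size easy to compute. The sketch below walks through the standard layer configuration: thirteen 3x3 convolutions with padding 1, five 2x2 max pools, and fully connected layers of 4096, 4096, and 1000 units on a 224x224 RGB input. It counts weights plus biases. It also reproduces the argument for small filters. Three stacked 3x3 layers with C channels see a 7x7 region, the same as a single 7x7 layer, but use 27C² weights instead of 49C² (biases ignored). `VGG16_CFG`, `vgg16_param_count`, `stacked_3x3` and `single_kxk` are hypothetical names.

```python
# VGG-16 convolutional part: integers are output channels of a 3x3 conv
# (stride 1, padding 1, so spatial size is preserved); "M" is 2x2 max pooling, stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

def vgg16_param_count(num_classes=1000, input_size=224):
    """Return (number of conv layers, conv parameters, total parameters)."""
    params, conv_layers = 0, 0
    in_ch, size = 3, input_size
    for v in VGG16_CFG:
        if v == "M":
            size //= 2                       # pooling halves height and width
        else:
            params += 3 * 3 * in_ch * v + v  # 3x3 weights + one bias per filter
            in_ch = v
            conv_layers += 1
    conv_params = params
    # Flattened 512 x 7 x 7 feature map feeds three fully connected layers
    fc_dims = [in_ch * size * size, 4096, 4096, num_classes]
    for d_in, d_out in zip(fc_dims, fc_dims[1:]):
        params += d_in * d_out + d_out
    return conv_layers, conv_params, params

def stacked_3x3(num_layers, channels):
    """Receptive field and weight count of stacked stride-1 3x3 convs (no biases)."""
    receptive_field = 1 + 2 * num_layers     # each 3x3 layer widens the view by 2 pixels
    weights = num_layers * 3 * 3 * channels * channels
    return receptive_field, weights

def single_kxk(k, channels):
    """Receptive field and weight count of one k x k conv (no biases)."""
    return k, k * k * channels * channels

print(vgg16_param_count())        # (13, 14714688, 138357544)
print(stacked_3x3(3, 512))        # (7, 7077888)
print(single_kxk(7, 512))         # (7, 12845056)
```

Most of VGG-16's roughly 138 million parameters sit in the first fully connected layer (25088 x 4096). The convolutional part accounts for under 15 million.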

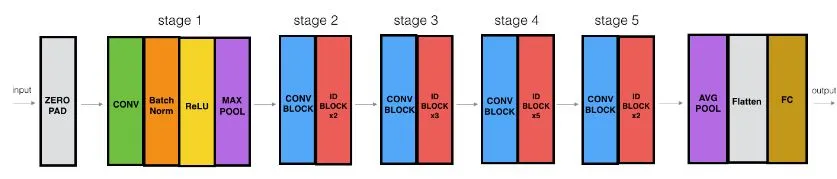

Residual Networks (ResNets)

- Developed By: Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, 2015.

- Key Features:

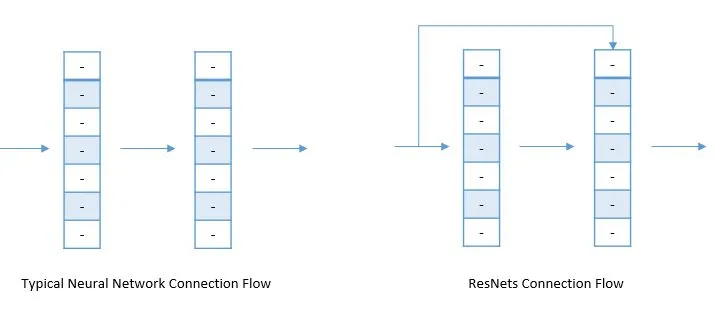

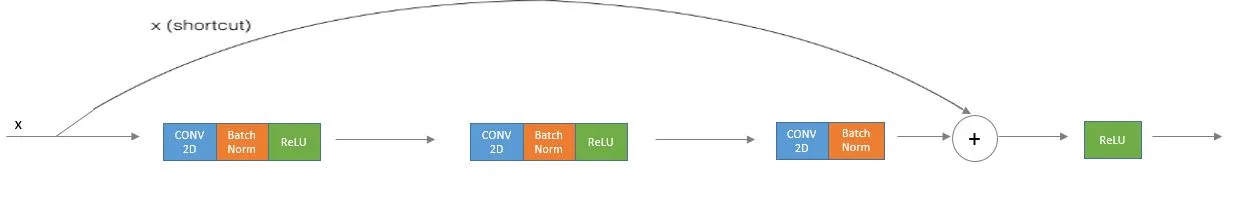

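- Architecture: Built from residual blocks in which the stacked layers learn a residual mapping F(x). An identity skip connection adds the block's input back, so each block outputs F(x) + x. The shortcut gives gradients a direct path through very deep networks.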

- Depth: Can be extremely deep. The ImageNet variants are ResNet-18, ResNet-34, ResNet-50, ResNet-101, and ResNet-152, where the number counts the weighted layers.

- Activation Function: Uses ReLU activation, with batch normalization after each convolution.

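- Innovation: Skip connections address the degradation problem, in which adding layers to a plain network raises its training error. They also ease the vanishing gradient problem, enabling training of very deep networks.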

- Application: Won the ILSVRC 2015 classification task (3.57% top-5 error with an ensemble). It is widely used as a backbone for object detection, segmentation, and other domains.

- Significance: Revolutionized deep learning by enabling much deeper networks, significantly improving performance on various benchmarks.
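Two ResNet ideas can be checked with simple arithmetic. First, the variant names follow from the number of blocks per stage. Basic blocks have 2 convolutions and bottleneck blocks have 3. The initial 7x7 convolution and final fully connected layer add 2 more. Second, a toy scalar model shows why the identity shortcut helps gradients. For a plain layer y = f(x), the chain rule multiplies factors f'(x). For a residual layer y = x + f(x), it multiplies factors 1 + f'(x), which cannot collapse toward zero when f'(x) ≥ 0. The toy layer f(x) = w * tanh(x) is an assumption chosen for illustration only; real ResNet blocks use convolutions, batch normalization, and ReLU. All names are hypothetical.

```python
import math

# Blocks per stage for the ImageNet ResNets: (block type, [stage1, stage2, stage3, stage4])
RESNET_CONFIGS = {
    18: ("basic", [2, 2, 2, 2]),
    34: ("basic", [3, 4, 6, 3]),
    50: ("bottleneck", [3, 4, 6, 3]),
    101: ("bottleneck", [3, 4, 23, 3]),
    152: ("bottleneck", [3, 8, 36, 3]),
}

def weighted_depth(kind, blocks):
    """Conv/FC layers: stem conv + layers inside all blocks + final FC."""
    layers_per_block = 2 if kind == "basic" else 3
    return 1 + layers_per_block * sum(blocks) + 1

def end_to_end_gradient(depth, w, residual, x0=0.5):
    """d x_L / d x_0 through `depth` scalar layers f(x) = w * tanh(x)."""
    x, grad = x0, 1.0
    for _ in range(depth):
        local = w * (1.0 - math.tanh(x) ** 2)   # f'(x)
        if residual:
            grad *= 1.0 + local                 # y = x + f(x) -> dy/dx = 1 + f'(x)
            x = x + w * math.tanh(x)
        else:
            grad *= local                       # y = f(x) -> dy/dx = f'(x)
            x = w * math.tanh(x)
    return grad

for depth, (kind, blocks) in RESNET_CONFIGS.items():
    print(depth, kind, blocks, weighted_depth(kind, blocks))

print("plain:   ", end_to_end_gradient(50, 0.5, residual=False))  # vanishingly small
print("residual:", end_to_end_gradient(50, 0.5, residual=True))   # at least 1
```

In the plain chain, each factor is at most w = 0.5, so after 50 layers the gradient is below 0.5^50 ≈ 1e-15. The identity path keeps every residual factor at or above 1.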

Diagrams

- LeNet-5: Illustration of the 7-layer architecture.

- AlexNet: Diagram showing the 8-layer architecture with 5 convolutional layers and 3 fully connected layers.

- VGG-16: Illustration of the 16-layer architecture with repeated 3x3 convolution filters.

- ResNet: Diagram showing residual blocks with skip connections.

Notes and Annotations

Summary of Key Points

- LeNet-5: Pioneered CNNs for digit recognition, establishing the convolution-and-pooling pattern that later architectures build on.

- AlexNet: Revived interest in deep learning with large performance gains on ImageNet, popularizing ReLU activation, dropout, and GPU training.

- VGG-16: Showed the effectiveness of deep networks with a simple and uniform architecture using small convolution filters.

- ResNet: Enabled the training of very deep networks by introducing residual blocks and skip connections, mitigating the degradation and vanishing gradient problems.

Personal Annotations and Insights

- LeNet-5's simple yet effective architecture laid the groundwork for more complex CNNs.

- AlexNet's use of ReLU activation and GPU training revolutionized large-scale image classification, making deep learning practical.

- VGG-16's use of small filters throughout the network demonstrates that deep, uniform architectures can achieve high performance.

- ResNet's residual blocks are a breakthrough. They allow networks with hundreds of layers to train without the degradation seen in plain networks, and the original paper's CIFAR-10 experiments went beyond a thousand layers.

Backlinks

- Neural Network Architectures: Overview of how different architectures improve performance and handle challenges like vanishing gradients.

- Optimization Techniques: The role of innovations like ReLU activation and dropout in training deep networks.

- Image Processing Applications: Practical applications of CNN models in various image recognition and classification tasks.