Hopfield Network

Definition

A Hopfield Network is a form of recurrent artificial neural network popularised by John Hopfield in 1982. It serves as an associative memory system with binary threshold nodes and is used for pattern recognition and optimisation problems.

Key Concepts

- Recurrent Neural Network: A type of neural network where connections between neurons form a directed cycle, allowing persistent internal states.

- Associative Memory: The ability of the network to recall a stored pattern when presented with a noisy or partial version of it.

- Energy Function: A scalar function of the network's state that never increases as neurons are updated one at a time, which makes it a tool for analysing the stability and convergence of the network.

- Binary Threshold Nodes: Neurons in the network that have binary states (typically -1 or 1) and update their states based on the weighted sum of inputs.

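- Symmetric Weight Matrix: The weight matrix in a Hopfield Network is symmetric ($w_{ij} = w_{ji}$), usually with no self-connections ($w_{ii} = 0$). Combined with asynchronous updates, this guarantees that the network's energy never increases, so the network settles into a stable state.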

Detailed Explanation

A Hopfield Network is a form of recurrent neural network designed for associative memory tasks. It consists of a set of binary threshold neurons where each neuron is connected to every other neuron with symmetric weights (i.e., $w_{ij} = w_{ji}$). Hopfield's work also prompted the construction of the first analog VLSI neural chip. There are two types of Hopfield network:

- Discrete Hopfield Networks: An auto-associative, fully interconnected, single-layer feedback network with symmetric weights. It takes two-valued inputs, either binary (0, 1) or bipolar (+1, -1); bipolar inputs make the analysis easier. Neurons are updated repeatedly until no update produces a new response, at which point the network has reached equilibrium.

- Continuous Hopfield Networks
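Since the discrete network accepts either binary or bipolar inputs, it helps to be able to convert between the two encodings. A minimal sketch, assuming NumPy is available; the function names `binary_to_bipolar` and `bipolar_to_binary` are made up for this note:

```python
import numpy as np

def binary_to_bipolar(b):
    """Map {0, 1} values to {-1, +1} using s = 2b - 1."""
    return 2 * np.asarray(b, dtype=int) - 1

def bipolar_to_binary(s):
    """Map {-1, +1} values back to {0, 1} using b = (s + 1) / 2."""
    return (np.asarray(s, dtype=int) + 1) // 2
```

Working in the bipolar form keeps the later formulas simple, because products such as $s_i s_j$ are +1 when two neurons agree and -1 when they disagree.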

Network Structure:

- Neurons: Each neuron has a binary state (usually -1 or 1) and updates its state based on the input from other neurons.

- Connections: Neurons are fully connected with each other, and the connections are represented by a weight matrix.

Energy Function: The energy function $E$ of the network is defined as: $$ E = -\frac{1}{2} \sum_{i} \sum_{j} w_{ij} s_i s_j + \sum_{i} \theta_i s_i $$ where $s_i$ is the state of neuron $i$, $w_{ij}$ is the weight between neurons $i$ and $j$, and $\theta_i$ is the threshold of neuron $i$. The network evolves to minimize this energy function, leading to stable states that represent stored patterns.
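The energy formula can be checked numerically. The sketch below is illustrative only: the names `energy` and `update_neuron` and the 3-neuron weight matrix are assumptions of this note, and the update uses the usual threshold rule (set $s_i = +1$ when the weighted input reaches $\theta_i$, otherwise $-1$), which the text above does not spell out.

```python
import numpy as np

def energy(W, s, theta=None):
    """E = -1/2 * s^T W s + theta . s for a bipolar state vector s."""
    s = np.asarray(s, dtype=float)
    if theta is None:
        theta = np.zeros_like(s)  # assumption: zero thresholds by default
    return -0.5 * s @ W @ s + theta @ s

def update_neuron(W, s, i, theta=None):
    """Update only neuron i with the threshold rule and return the new state."""
    s = np.array(s, dtype=float)  # copy so the caller's state is untouched
    if theta is None:
        theta = np.zeros_like(s)
    s[i] = 1.0 if W[i] @ s >= theta[i] else -1.0
    return s

# Hypothetical 3-neuron network: symmetric weights, zero diagonal.
W = np.array([[0.0, 1.0, -1.0],
              [1.0, 0.0, 1.0],
              [-1.0, 1.0, 0.0]])
s = np.array([1.0, -1.0, 1.0])

e_before = energy(W, s)           # energy of the starting state
s_new = update_neuron(W, s, i=1)  # asynchronous update of one neuron
e_after = energy(W, s_new)        # energy after the update
print(e_before, e_after)
```

For this example the single update flips neuron 1 and the energy drops, which is the behaviour the symmetric, zero-diagonal weight matrix is meant to guarantee.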

Training and Recall:

- Training: Patterns to be stored are encoded in the weight matrix using the Hebbian learning rule: $$ w_{ij} = \frac{1}{N} \sum_{\mu=1}^{P} \xi_i^\mu \xi_j^\mu $$ where $\xi_i^\mu$ is the state of neuron $i$ in pattern $\mu$, $P$ is the number of stored patterns, and $N$ is the number of neurons. Self-connections are usually set to zero ($w_{ii} = 0$).

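- Recall: When presented with a noisy or partial pattern, the network updates neuron states iteratively, setting $s_i = +1$ if $\sum_j w_{ij} s_j \geq \theta_i$ and $s_i = -1$ otherwise, until no state changes. It typically converges to the stored pattern closest to the input, although it can also settle in a spurious minimum that matches no stored pattern.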
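The training and recall steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the names `train_hebbian` and `recall`, the zero thresholds, the zeroed diagonal, the random update order, and the two 8-neuron example patterns are all choices made for this note.

```python
import numpy as np

def train_hebbian(patterns):
    """Build the weight matrix from bipolar patterns (one per row)."""
    X = np.asarray(patterns, dtype=float)  # shape (P, N)
    n = X.shape[1]
    W = X.T @ X / n                        # w_ij = (1/N) * sum_mu xi_i * xi_j
    np.fill_diagonal(W, 0.0)               # assumption: no self-connections
    return W

def recall(W, probe, max_sweeps=100, seed=0):
    """Asynchronously update neurons until a full sweep changes nothing."""
    s = np.array(probe, dtype=float)
    rng = np.random.default_rng(seed)
    for _ in range(max_sweeps):
        changed = False
        for i in rng.permutation(len(s)):  # visit neurons in random order
            new_state = 1.0 if W[i] @ s >= 0 else -1.0  # zero thresholds
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:                    # stable state reached
            break
    return s

# Two hypothetical orthogonal patterns over 8 neurons.
patterns = np.array([[1, -1, 1, -1, 1, -1, 1, -1],
                     [1, 1, 1, 1, -1, -1, -1, -1]])
W = train_hebbian(patterns)

noisy = patterns[0].copy()
noisy[0] = -noisy[0]                       # corrupt one bit of the first pattern
restored = recall(W, noisy)
print(np.array_equal(restored, patterns[0]))
```

Updating one neuron at a time, rather than all at once, is what ties this procedure to the energy argument: each single update can only keep the energy the same or lower it.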

Applications:

- Pattern Recognition: Used for recognising and recalling patterns from noisy inputs.

- Optimisation: Solving combinatorial optimisation problems like the traveling salesman problem.

- Error Correction: Correcting errors in binary data transmission.

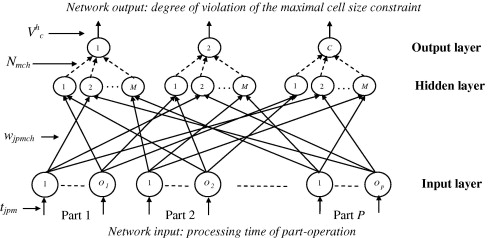

Diagrams

Links to Resources

- Hopfield Neural Network - GFG

- Hopfield Networks and Boltzman Machines - Part 1

- Hopfield Networks and Boltzman Machines - Part 2

- Hopfield Network Wikipedia

Notes and Annotations

Summary of key points:

- A Hopfield Network is a recurrent neural network used for associative memory tasks.

- It uses binary threshold neurons and a symmetric weight matrix.

- The network evolves to minimize its energy function, leading to stable states representing stored patterns.

Personal annotations and insights:

- Hopfield Networks highlight the importance of energy minimization in neural network dynamics.

- The concept of associative memory in Hopfield Networks is analogous to human memory, where partial information can trigger recall of the entire memory.

- Real-world applications of Hopfield Networks demonstrate the power of simple neural models in solving complex problems.

- Understanding Hopfield Networks provides insights into the foundational principles of more complex neural networks and machine learning algorithms.

Backlinks

- Linked from Unit III Associative Learning