Error Performance in Hopfield Networks

Definition

Error performance in Hopfield Networks refers to the network's ability to correctly recall stored patterns when presented with noisy or incomplete inputs. It measures the accuracy and reliability of the network in retrieving the correct memory patterns despite the presence of errors or disturbances.

Key Concepts

- Pattern Recall: The process of retrieving stored patterns from the network when given an initial noisy or partial input.

- Error Correction: The ability of the network to correct errors in the input and converge to the correct stored pattern.

- Capacity: The maximum number of patterns that can be reliably stored and recalled by the network.

- Noise Resilience: The network's robustness to noisy inputs and its ability to still recall the correct patterns.

- Convergence: The process through which the network settles into a stable state representing a stored pattern.

Detailed Explanation

Error performance in Hopfield Networks is crucial for their effectiveness in applications such as associative memory, pattern recognition, and optimization. Several factors influence the error performance, including the network's capacity, noise resilience, and convergence properties.

Pattern Recall and Error Correction:

- Initial State: When a noisy or partial input pattern is presented, the network updates the states of its neurons iteratively.

- Update Rule: Neurons update their states based on the weighted sum of inputs from other neurons, typically using a binary threshold function.

- Stable State: The network evolves to a stable state. Ideally this is the stored pattern closest to the input, with the errors corrected, although the network can also settle into a spurious state that matches no stored pattern.
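The recall steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes bipolar (+1/-1) patterns, Hebbian weights with a zero diagonal, zero thresholds, and the convention that a field of exactly zero maps to +1. The names `train_hebbian` and `recall` are hypothetical.

```python
import random

def train_hebbian(patterns):
    """Build a symmetric weight matrix with zero diagonal from +1/-1 patterns."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights

def recall(weights, state, max_sweeps=100, rng=None):
    """Update neurons one at a time until a full sweep changes nothing."""
    rng = rng or random.Random(0)
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)  # asynchronous updates in random order
        for i in order:
            # Weighted sum of inputs from the other neurons
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1  # binary threshold (assumed tie rule)
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break  # stable state reached
    return state

# Two orthogonal 8-bit patterns chosen for illustration
stored = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]
weights = train_hebbian(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # corrupt one bit of the first pattern
recalled = recall(weights, noisy)
print(recalled == stored[0])  # True
```

With only two orthogonal patterns and a single flipped bit, the corrupted input lies well inside the basin of the first pattern, so the flipped bit is restored on the first sweep.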

Capacity:

- Storage Capacity: The number of patterns P that can be stored and reliably recalled depends on the number of neurons N. For random patterns stored with the Hebbian rule, the commonly quoted capacity is approximately 0.138N patterns (often rounded to 0.14N or 0.15N); beyond this load, recall errors increase sharply.

- Pattern Overlap: As the number of stored patterns increases, the likelihood of errors in recall increases due to overlap and interference between patterns.
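The interference between patterns can be made explicit with a short derivation. The notation here is an assumption, not taken from the note: patterns are written as bipolar vectors \xi^\mu, the weights follow the Hebbian rule with zero diagonal, and h_i^\nu is the input to neuron i when the network sits exactly on pattern \nu.

```latex
% Assumed Hebbian weights (zero diagonal) for P bipolar patterns of length N
w_{ij} = \frac{1}{N} \sum_{\mu=1}^{P} \xi_i^{\mu} \xi_j^{\mu}, \qquad w_{ii} = 0

% Input to neuron i when the state equals pattern \nu:
% a signal term plus a crosstalk term from the other P - 1 patterns
h_i^{\nu} = \sum_{j \neq i} w_{ij}\, \xi_j^{\nu}
          = \frac{N-1}{N}\, \xi_i^{\nu}
          + \frac{1}{N} \sum_{\mu \neq \nu} \sum_{j \neq i} \xi_i^{\mu} \xi_j^{\mu} \xi_j^{\nu}

% For random, uncorrelated patterns the crosstalk has zero mean and variance
\frac{(P-1)(N-1)}{N^{2}} \approx \frac{P}{N}
```

Neuron i keeps its correct value as long as the crosstalk term does not outweigh the signal term with the opposite sign. Because the crosstalk variance grows roughly as P/N, adding patterns makes such sign flips more likely, which is the interference described above.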

Noise Resilience:

- Noise in Input Patterns: The network's ability to recall the correct pattern from a noisy input depends on the noise level (for example, the fraction of flipped bits) and on how heavily the network is loaded relative to its capacity.

- Robustness: Networks with higher noise resilience can correct more errors and recall the correct pattern even when the input is significantly corrupted.
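One way to make the noise level concrete is to flip a fixed fraction of bits and measure how similar the result is to the original. The sketch below assumes bipolar (+1/-1) patterns; the helper names `corrupt` and `overlap` are hypothetical, and the overlap measure (the normalized dot product) is one common choice rather than the only one.

```python
import random

def corrupt(pattern, flip_fraction, rng):
    """Return a copy with round(flip_fraction * N) randomly chosen bits flipped."""
    n = len(pattern)
    noisy = list(pattern)
    for i in rng.sample(range(n), round(flip_fraction * n)):
        noisy[i] = -noisy[i]
    return noisy

def overlap(a, b):
    """Normalized dot product: 1.0 for identical patterns, -1.0 for inverted ones."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

rng = random.Random(0)
pattern = [1, -1] * 10                # 20 units
noisy = corrupt(pattern, 0.25, rng)   # flips 5 of the 20 bits
print(overlap(pattern, noisy))        # 0.5
```

Flipping k of N bits always gives an overlap of 1 - 2k/N, so the overlap of a recalled state with the target pattern gives a graded measure of how much of the corruption was corrected.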

Convergence:

- Energy Minimization: With symmetric weights and asynchronous updates, each neuron update either lowers the network's energy or leaves it unchanged, so the recall process converges to a local minimum that ideally represents a stored pattern.

- Attractors: Stable states of the network act as attractors, pulling the network towards stored patterns. Effective convergence ensures the network reliably reaches the correct attractor despite initial errors.
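The energy argument can be checked numerically. The sketch below assumes the standard energy function E = -1/2 * sum over i, j of w_ij * s_i * s_j with zero thresholds, Hebbian weights with a zero diagonal, and asynchronous updates; the function names and the random test patterns are illustrative assumptions.

```python
import random

def hebbian_weights(patterns):
    """Symmetric Hebbian weights with zero diagonal for +1/-1 patterns."""
    n = len(patterns[0])
    return [[0.0 if i == j else sum(p[i] * p[j] for p in patterns) / n
             for j in range(n)] for i in range(n)]

def energy(weights, state):
    """Assumed energy function: E = -1/2 * sum_ij w_ij * s_i * s_j."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))

rng = random.Random(1)
n = 16
patterns = [[rng.choice([-1, 1]) for _ in range(n)] for _ in range(2)]
weights = hebbian_weights(patterns)

# Start from a random state and record the energy after every single update
state = [rng.choice([-1, 1]) for _ in range(n)]
energy_trace = [energy(weights, state)]
for _ in range(5):                       # five asynchronous sweeps
    for i in rng.sample(range(n), n):    # random update order
        field = sum(weights[i][j] * state[j] for j in range(n))
        state[i] = 1 if field >= 0 else -1
        energy_trace.append(energy(weights, state))

# Each update leaves the energy unchanged or lowers it
non_increasing = all(b <= a + 1e-12 for a, b in zip(energy_trace, energy_trace[1:]))
print(non_increasing)  # True
```

The guarantee relies on the symmetric, zero-diagonal weights and on updating one neuron at a time. Updating all neurons simultaneously can produce oscillations instead of convergence.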

Performance Metrics:

- Error Rate: The proportion of incorrect recalls out of total recall attempts.

- Convergence Time: The time taken by the network to converge to a stable state, typically counted in neuron updates or full update sweeps.

- Noise Tolerance: The maximum level of noise in the input that the network can handle while still correctly recalling the pattern.
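These metrics can be estimated empirically. The following sketch is one possible setup under stated assumptions: random bipolar patterns, Hebbian weights, asynchronous recall, a recall counted as correct only if every bit matches the target, and convergence time counted in sweeps (including the final sweep that confirms stability). The function names, network size, and trial counts are illustrative choices.

```python
import random

def hebbian_weights(patterns):
    """Symmetric Hebbian weights with zero diagonal for +1/-1 patterns."""
    n = len(patterns[0])
    return [[0.0 if i == j else sum(p[i] * p[j] for p in patterns) / n
             for j in range(n)] for i in range(n)]

def recall(weights, state, rng, max_sweeps=50):
    """Asynchronous recall; returns the final state and the sweeps used."""
    state = list(state)
    n = len(state)
    for sweep in range(1, max_sweeps + 1):
        changed = False
        for i in rng.sample(range(n), n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            return state, sweep
    return state, max_sweeps

def evaluate(patterns, flip_fraction, trials, rng):
    """Estimate error rate and mean convergence time at one noise level."""
    weights = hebbian_weights(patterns)
    n = len(patterns[0])
    failures = 0
    total_sweeps = 0
    for _ in range(trials):
        target = rng.choice(patterns)
        noisy = list(target)
        for i in rng.sample(range(n), round(flip_fraction * n)):
            noisy[i] = -noisy[i]
        final, sweeps = recall(weights, noisy, rng)
        if final != target:  # strict criterion: every bit must match
            failures += 1
        total_sweeps += sweeps
    return failures / trials, total_sweeps / trials

rng = random.Random(42)
n = 64
patterns = [[rng.choice([-1, 1]) for _ in range(n)] for _ in range(3)]
for flip_fraction in (0.0, 0.1, 0.2, 0.3, 0.4):
    error_rate, mean_sweeps = evaluate(patterns, flip_fraction, trials=20, rng=rng)
    print(f"noise={flip_fraction:.1f}  error_rate={error_rate:.2f}  "
          f"mean_sweeps={mean_sweeps:.1f}")
```

Noise tolerance can then be read off as the largest noise level at which the error rate stays below a chosen threshold. Repeating the sweep with more stored patterns shows how the tolerance shrinks as the load approaches capacity.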

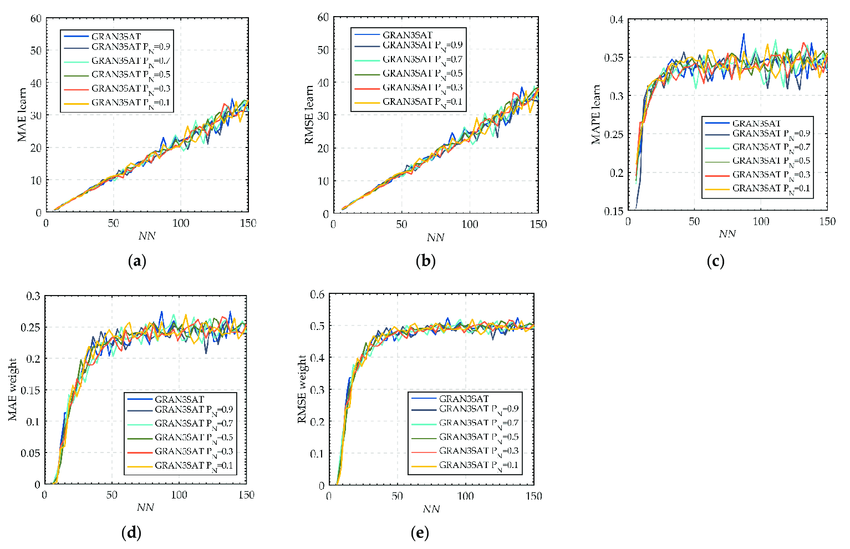

Diagrams

Links to Resources

Notes and Annotations

Summary of key points:

- Error performance measures the ability of Hopfield Networks to correctly recall stored patterns from noisy inputs.

- Key factors include pattern recall, error correction, capacity, noise resilience, and convergence.

- Effective error performance ensures reliable memory recall and robust pattern recognition.

Personal annotations and insights:

- Understanding error performance is essential for designing Hopfield Networks with high reliability and accuracy.

- Applications requiring robust pattern recognition, such as image processing and data retrieval, benefit from networks with low error rates and high noise tolerance.

- The balance between storage capacity and error performance is critical, as increasing capacity can lead to higher error rates due to pattern overlap.

- Further research and advancements in network design can enhance the error performance, making Hopfield Networks more effective for complex real-world tasks.

Backlinks

- Linked from Unit III Associative Learning